If the existing communication model has some fake news gaps, what can companies do?

We won’t be able to close off every route by which fake news can affect our communication model, so let’s be prepared. Here are a few ideas for you to consider.

See yourself from the outside

It’s critical that you understand how others see you and your brand – particularly when it comes to fake news.

A good place to start is to take an inventory of the feedback or signals you’re receiving from customers, from consumers generally, and online. This allows you to develop a deeper understanding of the way the world perceives you. Take stock of how fake news is or isn’t impacting your brand, and where your vulnerabilities might be. With this you can sort out how to allocate your limited resources, whether it’s dealing with direct fake news impact, better defining your value with customers, engaging people IRL, creating content, or something else.

As you think about how best to allocate your resources in the context of fake news, consider which sources people say they trust, customize that to your business, and adjust based on additional data. For example (PDF): while many blame traditional media for fueling fake news, according to the most recent Edelman Trust Barometer, people have become more engaged with traditional media this year and trust it more than other sources.

Prepare for impact

While it’s difficult to predict and then prepare for a scenario in which you or your brand are on the wrong end of a fake news firestorm, by investing now you’ll be better prepared to manage it.

Define your brand’s internal mental model for fake news and its potential impact on you. This will help you “speak the same language” internally in the event of a crisis.

Develop a shared understanding of impact and how you’ll measure it (this is a really, really hard problem; don’t underestimate it). This means you and your leadership should agree on the metrics, insights, and analysis that answer difficult and abstract questions like “Does this fake news matter?”, “How bad is it?”, or “Is now the time to take action?”

Invest in your team and technology

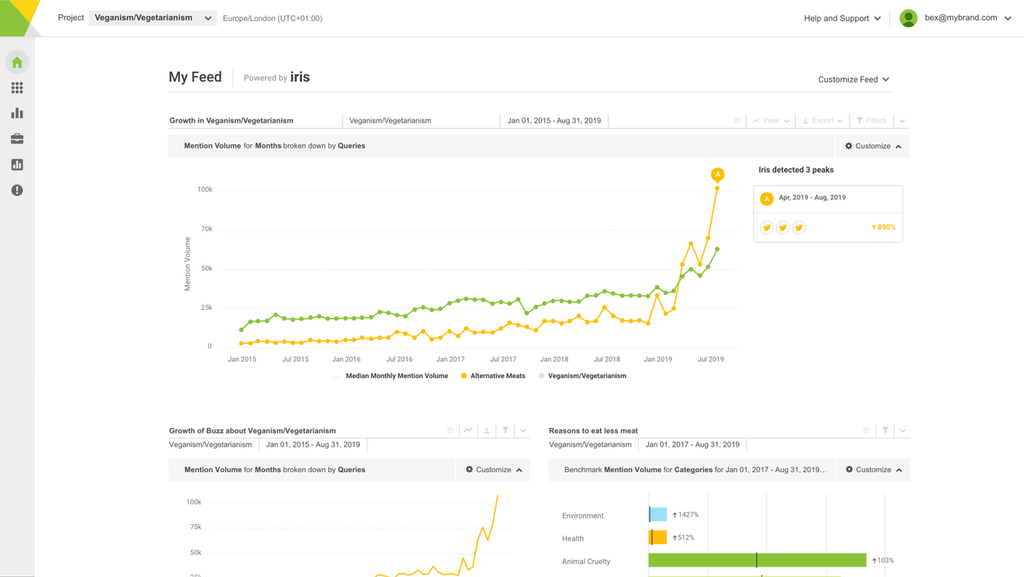

Invest in the data, technologies, and tools that enable you to detect and measure fake news’ impact on your brand. This means everything from social media, to market research, to customer feedback, and more.

Your people are what make that data and technology useful – constantly invest in them. Give them feedback. Hold them accountable. Let them hold you accountable.

Define a process

If you’ve defined your terms and invested in your people and technologies, you’re ready to wrap them up in a process that connects your various teams in order to efficiently make decisions and allocate resources in the event of a fake news impact.

This process will need to be flexible enough to allow for a variety of fake news scenarios (and others), but clear enough to quickly assemble decision-makers. The success of fake news is at least partially determined by its ability to redirect resources and attention, so if you’re making better decisions about your resources in a fake news crisis, you’re already managing it better.

You might go one step further: take my crudely modified communications model figure from above (yes, that “attention-grabbing” one) and use it to red-team each point in your process. This will only make it more robust.

Reminder: This process shouldn’t only be reactive. If you’re identifying knowledge gaps now, you can start filling them now by creating content, engaging audiences, communicating, and building trust.